CSV vs Parquet

Comma-Separated Values (CSV)

A comma-separated values (CSV) file is a delimited text file in which values are separated by commas. Each line in the file is a data record made up of one or more fields separated by commas. The format takes its name from this use of the comma as a field separator. A CSV file usually holds tabular data (numbers and text) in plain text, with the same number of fields on each line. The format is not fully standardized. The basic convention is to separate fields with commas; however, fields that themselves contain commas or line breaks need special handling, typically by enclosing the field in double quotes.
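To make the quoting rule concrete, here is a minimal Python sketch using the standard-library `csv` module. By default, the module encloses fields that contain commas, quote characters, or line breaks in double quotes. The sample rows are made up for illustration, and other CSV tools may quote differently.

```python
import csv
import io

# Sample records (made up for illustration); the last one has a comma
# and an embedded line break inside its fields.
rows = [
    ["id", "name", "comment"],
    ["1", "Alice", "plain text"],
    ["2", "Smith, Bob", "first line\nsecond line"],
]

# csv.writer uses QUOTE_MINIMAL by default: only fields containing the
# delimiter, the quote character, or a line-break character are quoted.
buffer = io.StringIO()
writer = csv.writer(buffer)
writer.writerows(rows)
text = buffer.getvalue()
print(text)

# Reading the text back recovers the original fields, even though the
# raw text now spans more physical lines than there are records.
parsed = list(csv.reader(io.StringIO(text)))
assert parsed == rows
```

A naive `line.split(",")` would break both the `"Smith, Bob"` field and the multi-line comment, which is why a real CSV parser is needed.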

Parquet

Apache Parquet is a column-oriented storage file format widely used in the Hadoop ecosystem by tools such as Pig, Spark, and Hive. The format is language-independent and uses a binary representation. Parquet is used to store very large data sets efficiently and has the extension. Its main features are listed below.

- Compared with row-based formats such as CSV, Parquet is designed for efficient columnar data storage.

- It is built from the ground up to support complex, nested data structures.

- It is designed to support efficient compression and encoding schemes.

- It enables lower storage costs and more efficient queries when used with serverless and managed query services such as Amazon Athena, Redshift Spectrum, and Google Dataproc.
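The difference between row storage and columnar storage can be illustrated with a toy model in Python. This is not Parquet's real file layout (Parquet is binary and organizes data into row groups, column chunks, and pages); JSON is used here only as a stand-in encoding, and the sample table and the helper names `to_row_layout` and `to_column_layout` are hypothetical. The sketch compares how many bytes a query that needs a single column must read under each layout.

```python
import json

# Hypothetical sample table.
rows = [
    {"city": "Oslo", "year": 2021, "sales": 120},
    {"city": "Oslo", "year": 2022, "sales": 135},
    {"city": "Rome", "year": 2021, "sales": 98},
    {"city": "Rome", "year": 2022, "sales": 101},
]

def to_row_layout(rows):
    """Serialize record by record, like the lines of a CSV file."""
    return [json.dumps(r).encode() for r in rows]

def to_column_layout(rows):
    """Serialize each column as one contiguous chunk (toy columnar layout)."""
    names = rows[0].keys()
    return {name: json.dumps([r[name] for r in rows]).encode() for name in names}

row_chunks = to_row_layout(rows)
col_chunks = to_column_layout(rows)

# Query: total sales. The row layout has to read every record in full...
row_bytes_read = sum(len(chunk) for chunk in row_chunks)
total_from_rows = sum(json.loads(chunk)["sales"] for chunk in row_chunks)

# ...while the column layout reads only the "sales" chunk.
col_bytes_read = len(col_chunks["sales"])
total_from_cols = sum(json.loads(col_chunks["sales"]))

print(total_from_rows, total_from_cols, row_bytes_read, col_bytes_read)
```

Both layouts give the same answer, but the columnar one reads only a fraction of the bytes; the gap grows as tables get wider.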

Comparison

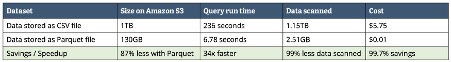

CSV and Parquet are both widely used to store data, but they differ substantially. CSV stores data row by row, whereas Parquet stores it column by column. Converting your CSV data to Parquet's columnar format, then compressing and partitioning it, can significantly reduce costs and improve query performance. The image above summarizes the savings achieved by converting data from CSV to Parquet.
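Part of the saving comes from compression. Because a columnar file stores the values of one column next to each other, repeated values are common and cheap to encode. The sketch below shows simplified versions of two techniques Parquet supports, dictionary encoding and run-length encoding. Parquet's actual on-disk encodings are more elaborate, and the function names and sample column here are hypothetical.

```python
from itertools import groupby

def dictionary_encode(values):
    """Replace each value with a small integer index into a dictionary."""
    dictionary, index_of, codes = [], {}, []
    for v in values:
        if v not in index_of:
            index_of[v] = len(dictionary)
            dictionary.append(v)
        codes.append(index_of[v])
    return dictionary, codes

def run_length_encode(codes):
    """Collapse runs of repeated codes into (code, run_length) pairs."""
    return [(code, len(list(group))) for code, group in groupby(codes)]

def decode(dictionary, runs):
    """Expand (code, run_length) pairs back into the original values."""
    return [dictionary[code] for code, length in runs for _ in range(length)]

# Hypothetical country column with long runs of repeated values.
country_column = ["NO"] * 4 + ["IT"] * 3 + ["NO"] * 2
dictionary, codes = dictionary_encode(country_column)
runs = run_length_encode(codes)
print(dictionary)  # ['NO', 'IT']
print(runs)        # [(0, 4), (1, 3), (0, 2)]
assert decode(dictionary, runs) == country_column
```

Nine strings shrink to a two-entry dictionary plus three small pairs. In a row-based CSV file the same values are interleaved with other columns, so such runs rarely form.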

Apache Parquet is designed for efficiency. Its columnar layout lets a query engine skip data that a query does not need: a query that touches two columns reads only those two columns instead of every full row. As a result, both searches and aggregations run faster, which translates into hardware savings and makes Parquet a cost-efficient format.

CSV, on the other hand, is easy to use and widely accepted. CSV files can be generated by many applications, such as Excel and Google Sheets, and you can even write them in your preferred text editor. However, that convenience has a cost, especially if CSV is your main format for data processing. Query services such as Amazon Athena and Amazon Redshift Spectrum charge based on the amount of data scanned by each query, and because CSV is row-based, a query must scan entire rows even when it needs only a few columns. Engines such as Apache Hive on AWS EMR likewise finish sooner when they read less data. Other cloud query services use similar scan-based pricing, so this is not unique to AWS.

In short, Parquet is a more efficient format for large files, and using it instead of CSV can save both time and money, as the comparison image above shows.
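Scan-based pricing makes the cost difference easy to estimate with back-of-the-envelope arithmetic. The sketch below assumes a hypothetical price per terabyte scanned, a hypothetical 1 TB table with 20 equally sized columns, a query that reads 2 of them, and a hypothetical 3:1 compression ratio for the columnar copy. None of these numbers come from a real provider or benchmark.

```python
# Hypothetical price per decimal terabyte scanned; check your provider's pricing.
PRICE_PER_TB_USD = 5.00
BYTES_PER_TB = 10**12

def query_cost(bytes_scanned, price_per_tb=PRICE_PER_TB_USD):
    """Cost of one query under a simple pay-per-byte-scanned model."""
    return bytes_scanned / BYTES_PER_TB * price_per_tb

table_bytes = 1 * BYTES_PER_TB  # hypothetical 1 TB table stored as CSV
columns_total = 20              # hypothetical: 20 equally sized columns
columns_needed = 2              # the query touches only 2 of them
compression_ratio = 3           # hypothetical compression of the columnar copy

# Row format: the whole file must be read to reach any column.
csv_scan = table_bytes
# Columnar format: only the needed columns are read, and they are compressed.
parquet_scan = table_bytes * columns_needed / columns_total / compression_ratio

print(f"CSV:     ${query_cost(csv_scan):.2f}")
print(f"Parquet: ${query_cost(parquet_scan):.2f}")
```

Under these assumptions the CSV query scans the full terabyte, while the Parquet query scans about 33 GB, so its cost drops by a factor of 30. Real savings depend on the data, the query, and the provider's actual pricing.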
